Streaming Random Forest

As promised in our previous blog post (Flavours of Streaming Processing), in this post we report the performance of our weapon of choice…

As promised in our previous blog post (Flavours of Streaming Processing), in this post we report the performance of our weapon of choice when it comes to classification on data streams: the Streaming Random Forest (SRF).

Random Forests were introduced by Leo Breiman et al in 2001. They combine two of the most powerful ideas in classification, decision trees and bagging, to represent a decision rule as a kind of majority vote over a large number of different decision trees that are generated probabilistically.

However, Random Forests scale poorly with the size of the dataset. This makes them impractical in streaming contexts: similarly to nearest neighbours, Random Forests need multiple runs over the full data history in order to update their predictions whenever a new datapoint arrives. Eventually, no matter how fast your hardware, Random Forests will become slower than your data arrival rate; and no matter how big your memory, they will outgrow it, at which point you will have to scale out. This is now possible using tools like Apache Spark MLib but the fundamental truth that the algorithm becomes slower over time remains true no matter how advanced the infrastructure.

Everything Changes

So one reason why one should switch to online Random Forests is speed and scalability. But the other is that decision rules that were valid last week might be obsolete today. An immediate “hack” is to retrain your classifier in regular intervals, using a fixed number of the most recent observations. This is a great quick fix, but it suffers from two drawbacks:

Your classifier is out-of-date most of the time (except just after retrains!)

The length of the window size is a critical parameter for performance.

To demonstrate this latter issue, we run an experiment on a dataset knownn as ELEC2 which has become a benchmark in the streaming data literature. Each record contains a timestamp, as well as four covariates capturing aspects of electricity demand and supply for the Australian New South Wales (NSW) Electricity Market from May 1996 to December 1998. Labels indicate the price change related to a recent moving average.

Below we report the error rate (lower is better) achieved by a sliding window implementation of random forests using the randomForest package in R, for 8 different window sizes (left group of bars in the Figure below). When the dataset is ordered by timestamp, the best performing window size is 100, on the lower end of the scale. This is classic case of “more data does not equal more information”: using 100 times more data (w=10,000 vs w=100) almost doubles (175%) the error rate!!

To drive the point home, we took the same data, but presented it to the classifier in random order so that it was no longer possible to take advantage of temporal effects. In this case, without any temporal effects, indeed the accuracy improved with larger window sizes.

The advantage that a well-calibrated streaming method can have over its offline counterpart in a streaming context is quite dramatic: in this case, the best-performing streaming classifier has an error rate of 12%, whereas a random forest trained on the entire dataset (minus 10% withheld for testing) achieves an error rate of 24%. A fraction of the data, double the accuracy !

So it seems that you can have your cake and eat it. Or can you? Well, you can only achieve this fantastic result if the window size is well-tuned. And that’s a tricky business… Set it too small, and you will have to learn everything from scratch every few observations. Set it too long, and obsolete patterns will start misleading you. And to make things work, a technique like random forest consists of a composition of thousands of decision rules, some of which may be getting obsolete faster than others.

Put simply, any model that runs on a live stream must be updated regularly, but there are fundamentally better ways to update a model than to rebuild it from scratch. A bit like a human, successful online learning algorithms recognise that they have a finite memory, and that some patterns “age” faster than others. The brute force of a sliding window cannot take you very far in real applications.

The Mentat SRF

Mentat’s Streaming Random Forest has three unique features:

It makes use of the cutting edge in online learning techniques to stay up-to-date by letting patterns “age” at different rates.

It has a fixed memory footprint: a model update operation takes the same amount of time whether the data has seen 10 datapoints or 10 million datapoints.

It utilises an “ensemble-of-ensembles” techniques, by combining a streaming self-tuning implementation of random forests with a number of other, simpler but highly agile classifiers. These classifiers have the benefit that they can pick up on short-term linear trends much faster than a complex technique like a random forest, while allowing the latter to extract deeper patterns from the residual signal.

Indeed, in the example above, the SRF tool achieves the same result as the best-performing window, without any manual tuning whatsoever. This performance is identical to the state-of-the-art in the literature (see a related publication by our Chief Data Scientist here).

Empirical Study: Detecting MalwareThe Kaggle competition of 1999 introduced a benchmark dataset for classification on large datasets. It considered five types of traffic:

Normal

Attack Type 1: Probe

Attack Type 2: Denial-Of-Service (DOS)

Attack Type 3: user-to-root

Attack Type 4: remote-to-local

This is an example of a multi-label classification problem: we don’t simply need to detect malicious traffic, but want to know what type of attack it is in real-time, so that we can take automatic action.

It was revealed that Probe and DOS traffic can be distinguished from normal traffic by very simple methods, such as a nearest neighbours classifier — the winning method only managed to improve accuarcy in these cases by a few percentage points. User-to-root and remote-to-local attacks were much more challenging, with the winning method achieving 92% and 87% error rate respectively — more than 8 out of 10 examples were misclassified in both cases.

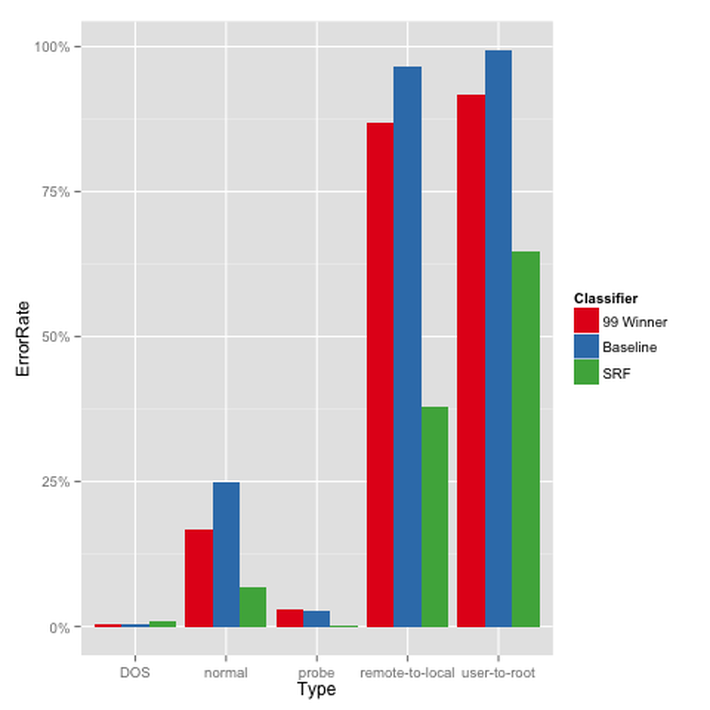

Below we compare SRF to the winning method, in terms of the error rate (lower is better):

When it comes to DOS and Probe there wasn’t much to improve upon — the baseline method already achieved error rates below 5%. However, for remote-to-local and user-to-root, the ’99 Winner improved upon the baseline significantly (red is lower than blue in the last two columns). SRF blows it out of the water, with less than half the errors for remote-to-local, and a 40% decrease for user-to-root. These improvements look even more impressive when one takes into account two things:

SRF was applied out-of-the-box to this dataset, whereas the 99 winner was bespoke.

SRF maintains a fixed memory footprint

Description of the API

The Mentat SRF has a very simple API, with three components:

A config file which describes:

the number of input features, and their type: categorical (e.g., a colour, or profession) or numeric (e.g., height).

the number of labels (e.g., “yes/no” versus “malicious/DOS/probe/…”).

Don’t worry, if you don’t provide a config file, we will guess.

A predict call, which submits a new unlabelled datapoint, and returns its predicted label. Being mathematicians at heart we never just report the label alone, but also provide you with estimated probabilities, so you can know how confident we are in each prediction.

An update call, which submits to our API a new datapoint, its timestamp and label.

To make it easier for you we can also accept multiple datapoints in one request. Our webservice for both predict calls is extremely fast and scalable, and our premiums service can support even the most demanding real-time applications, via Amazon Kinesis.

To sum up:

no need for classifier selection and tuning (window size or otherwise)

no need for you to host the decision engine for your web app

no need for you to monitor the performance of your model and decide when and how to retrain it — our self-tuning engine monitors performance and ensures it remains optimal at all times

no need for you to scale out as you get more data

For the enthusiasts out there, we can expose more control parameters, as well optionally return the updated decision rule as an XML file after an update call. We can also expose monitoring parameters such as the recent error rate or AUC (although we prefer to measure classification performance by the H-measure as well as a metric of how fast your data is aging.) If you want to be part of the private beta, please get in touch.

Feature extraction and unstructured data

Often datasets require a feature extraction step before they can be turned into the right format for classification. This step can be another crucial factor in performance. Our experienced data science team can support this on a consulting basis.

If your dataset or tasks involve unstructured data, such as free text, images, or networks, do not despair!. We can support feature extraction steps on all such data types, either using our own bespoke software or via integration with Alchemy API, as we are an IBM Watson Ecosystem Partner.

What else is coming?

Classification is a supervised technique: a bit like a child, the classifier needs to be shown labelled examples of the ground truth in order to extrapolate for the future. But children also learn by themselves, without explicit guidance by gradually developing an understanding of “normal behaviour” of their environment, and responding appropriately to any “anomalous events”.

This is also true of machine learning algorithms. For example, in cybersecurity, the most dangerous attacks come in the form of previously unseen types of threats (zero-day). A supervised classifier is by construction hopeless against such novel threats. Similarly, new types of fraud appear every day; new types of bugs are are introduced in software; and new customer behaviours can develop on a weekly basis.

When everything changes, profiling and anomaly detection is a necessary complement to agile, adaptive classification technology. This is why our core product, datastream.io, is designed to perform unsupervised profiling and anomaly detection at scale, as a service. Please stay tuned for our next blog post on this topic.

Originally published at www.ment.at on 10-Dec-2015